Saltcorn copilot: build Saltcorn applications with generative AI


Together with Saltcorn 0.9.2, we are releasing a copilot module that helps you build applications from natural language using generative AI. The premise of no-code platforms like Saltcorn has always been to enable people with limited software engineering skills to build software, and generative AI has the potential to make that process significantly easier. Our initial testing in this area is showing strong results, and the copilot module is now ready to play with. Today you can use the Saltcorn copilot to design databases and build actions.

To get started, you need a generative AI backend (an OpenAI account, an OpenAI-compatible server such as local.ai, or a local llama.cpp installation) and a Saltcorn instance updated to 0.9.2. Then go ahead and install the copilot module from the Saltcorn module store.

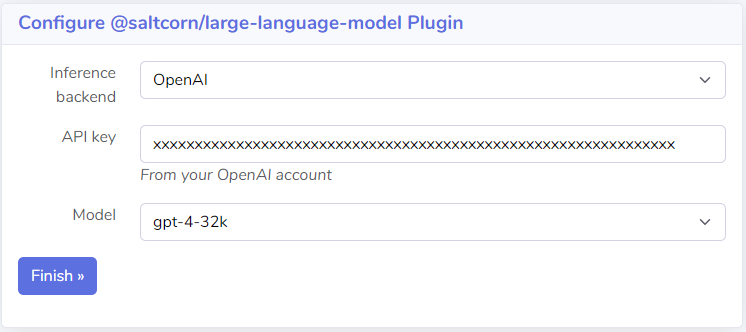

This will also install its dependency, the large-language-model module. You should configure the large-language-model module with your OpenAI account or compatible server details. If you have not done so by the time you run the copilot, it will remind you.
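To make "OpenAI-compatible" a little more concrete, here is a minimal sketch of the kind of request such a backend accepts. It assumes the server exposes the OpenAI-style `chat/completions` endpoint. The helper `buildChatRequest`, the local URL, the placeholder key and the model name `local-model` are our own illustrations, not part of the Saltcorn module, and the sketch only builds the request without sending it.

```typescript
// Hypothetical helper, not Saltcorn code: builds an OpenAI-style chat
// completion request so the hosted and local cases can be compared.
interface BackendConfig {
  baseUrl: string; // e.g. "https://api.openai.com/v1" or a local server URL
  apiKey?: string; // assumption: a local server may not require a key
  model: string;
}

interface ChatRequest {
  url: string;
  headers: Record<string, string>;
  body: string;
}

function buildChatRequest(cfg: BackendConfig, prompt: string): ChatRequest {
  const headers: Record<string, string> = {
    "Content-Type": "application/json",
  };
  // Only send an Authorization header when a key is configured.
  if (cfg.apiKey) headers["Authorization"] = `Bearer ${cfg.apiKey}`;
  return {
    // Strip trailing slashes so the path is joined cleanly.
    url: `${cfg.baseUrl.replace(/\/+$/, "")}/chat/completions`,
    headers,
    body: JSON.stringify({
      model: cfg.model,
      messages: [{ role: "user", content: prompt }],
    }),
  };
}

// Hosted OpenAI account (placeholder key).
const hosted = buildChatRequest(
  { baseUrl: "https://api.openai.com/v1", apiKey: "sk-placeholder", model: "gpt-4" },
  "Hello"
);

// Assumed local server address and model name.
const local = buildChatRequest(
  { baseUrl: "http://localhost:8080/v1/", model: "local-model" },
  "Hello"
);
```

The only things that differ between the two cases are the base URL, the key and the model name, which is roughly the information the module configuration asks for.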

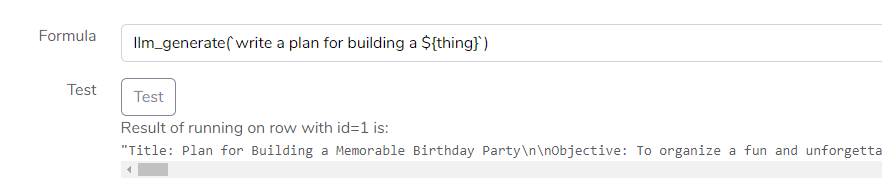

On its own, the large-language-model module gives you a function you can use in your calculated fields to prompt the generative AI. This means you can now build applications that use generative AI to process data for the user. A quick example: a calculated field based on the llm_generate function can generate a plan for something that the user has entered.
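As a rough sketch of the logic behind such a field, the snippet below uses a stand-in for `llm_generate`. We assume here that it takes a prompt string and resolves to the generated text; check the module documentation for the real signature. The `goal` field, the `Row` type and the helper names are hypothetical.

```typescript
// Stand-in for the function provided by the large-language-model module.
// ASSUMPTION: prompt string in, promise of generated text out.
async function llm_generate(prompt: string): Promise<string> {
  return `[generated text for: ${prompt}]`;
}

// Hypothetical row from a table with a user-entered "goal" field.
interface Row {
  goal: string;
}

// Builds the prompt from the user's input.
function planPrompt(row: Row): string {
  return `Write a short step-by-step plan for: ${row.goal}`;
}

// The logic a calculated "plan" field would express.
async function planField(row: Row): Promise<string> {
  return llm_generate(planPrompt(row));
}
```

In a real calculated field you would write only the expression itself; the wrapper functions are here just to keep the sketch self-contained.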

That's it! Now you can go ahead and connect that to a form leading into a show view, where the plan will be filled in and shown to the user. Hurray! But that is not the topic of this blog post; the copilot build assistant is.

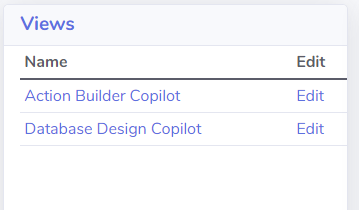

We are releasing two copilots today: one for designing databases based on an application description, and one for creating action code for existing databases. Unlike in the previous example, here all of the generation happens at build time. The end user will see the application built by generative AI, but the AI itself will no longer be in the loop when the end user uses the product.

Once the large-language-model module has been configured, you should see two new views in your list of views: database design copilot and action builder copilot.

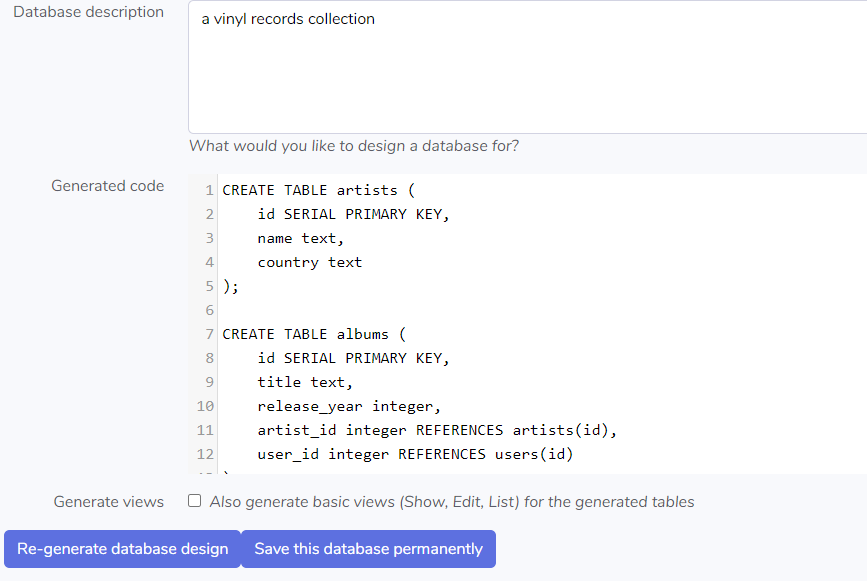

In the database builder you are asked to describe the application for which you want to design a database. The database builder is intended to be the first thing you run when you start building the application, because the database design is the foundation of any Saltcorn application. Some example descriptions we have tried here are:

- A bicycle rental business

- A holiday rental business

- A vinyl record collection

- An employee database

- An e-commerce application selling socks

But the possibilities are endless and this is a lot of fun to explore. In a future version we will generate an entity-relationship diagram you can browse before committing to a final database design.
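To give a feel for what a database design for one of these descriptions might contain, here is a hand-written sketch for "A bicycle rental business". It is not actual copilot output, and the `TableDesign` shape, the field type names and the `findBrokenReferences` check are our own assumptions for illustration.

```typescript
// Illustrative field types; an assumption, not Saltcorn's full type list.
type FieldType = "String" | "Integer" | "Float" | "Bool" | "Date" | "Key";

interface FieldDesign {
  name: string;
  type: FieldType;
  references?: string; // target table name, for Key fields only
}

interface TableDesign {
  name: string;
  fields: FieldDesign[];
}

// Hand-written example design, NOT generated by the copilot.
const bicycleRental: TableDesign[] = [
  {
    name: "customers",
    fields: [
      { name: "full_name", type: "String" },
      { name: "email", type: "String" },
    ],
  },
  {
    name: "bicycles",
    fields: [
      { name: "model", type: "String" },
      { name: "daily_rate", type: "Float" },
    ],
  },
  {
    name: "rentals",
    fields: [
      { name: "customer", type: "Key", references: "customers" },
      { name: "bicycle", type: "Key", references: "bicycles" },
      { name: "start_date", type: "Date" },
      { name: "end_date", type: "Date" },
    ],
  },
];

// Lists Key fields that point at a table missing from the design.
function findBrokenReferences(tables: TableDesign[]): string[] {
  const names = new Set(tables.map((t) => t.name));
  const problems: string[] = [];
  for (const t of tables) {
    for (const f of t.fields) {
      if (f.type === "Key" && (!f.references || !names.has(f.references))) {
        problems.push(`${t.name}.${f.name}`);
      }
    }
  }
  return problems;
}
```

A check like this is the sort of sanity test you might want to run over any generated design before committing to it.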

To take some of the tedium out of building a minimal application scaffold, we have also included an option for creating basic views for all of the tables. There is a lot of scope for improving these generated views, so please send in your suggestions.

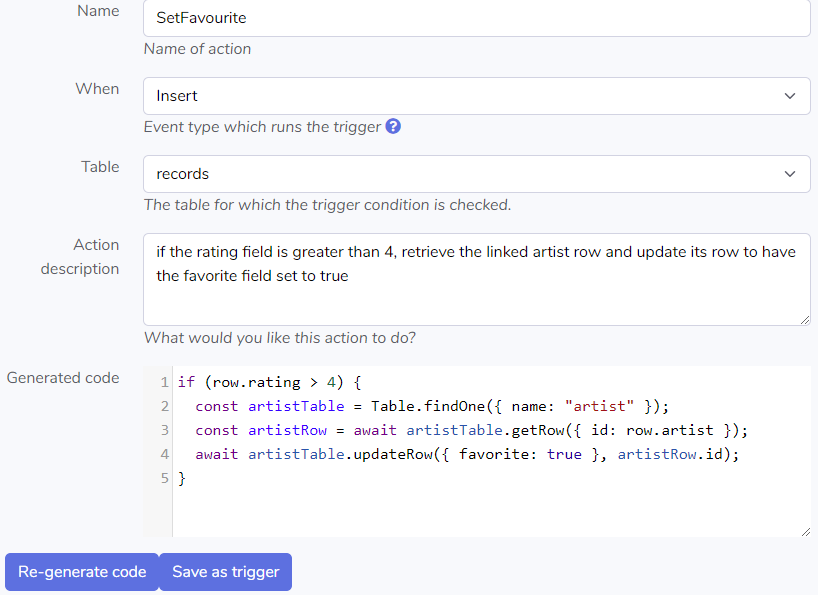

The action builder, on the other hand, is designed to augment an existing application. It will help you build scripts for complex actions that perform non-trivial manipulations of the database or interact with APIs.

Personally, I have never been convinced by the standard no-code approach to making actions accessible to non-programmers, which is to build some sort of graphical editor for a workflow diagram where you connect boxes with arrows. It seems that any non-trivial application would quickly become difficult to maintain using graphical workflow editors.

Generative AI offers an alternative route for building no-code actions: the user describes them in natural language, which is then translated into code by the generative AI. This is what the action builder does. It augments the user's action description with the documentation of the available API, and then uses the code-generating abilities of large language models to produce the code for the specified action. Beta users report this works surprisingly well with the long context version of GPT-4.
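The augmentation step can be sketched roughly as follows. This is an illustration of the idea under our own assumptions, not the action builder's actual prompt: `buildActionPrompt`, the `ApiDoc` shape, the prompt wording and the two API entries are hypothetical placeholders rather than Saltcorn's documented API.

```typescript
// One entry of API documentation to include in the prompt (hypothetical shape).
interface ApiDoc {
  signature: string;
  description: string;
}

// Combines the API documentation with the user's description into one prompt.
function buildActionPrompt(description: string, docs: ApiDoc[]): string {
  const docText = docs
    .map((d) => `- ${d.signature}: ${d.description}`)
    .join("\n");
  return [
    "You write code for an action in a database application.",
    "Available API:",
    docText,
    "Task described by the user:",
    description.trim(),
    "Respond with code only.",
  ].join("\n\n");
}

// Placeholder API entries, invented for this sketch.
const exampleDocs: ApiDoc[] = [
  { signature: "insertRow(table, row)", description: "adds a row to a table" },
  { signature: "sendEmail(to, subject, body)", description: "sends an email" },
];

const examplePrompt = buildActionPrompt(
  "When a rental is created, email the customer a confirmation.",
  exampleDocs
);
```

Because the API documentation can be long, the whole prompt can grow quickly, which may be part of why a long context model helps here.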

The database design and the action builder copilots are released today but there are plenty of ideas for the future:

- HTML builder: build snippets of static HTML that are styled according to a description (e.g. "a button looking like a squished drop of water"). These could be used as library components or as static pages.

- Style builder: a copilot just for building CSS styles for custom components inside the Saltcorn drag and drop builder.

- View builder: the ne plus ultra of Saltcorn generative AI. Simply describe your views and it shall be done. This sounds far-fetched, but we in fact have a lot of information that helps in this process: the database structure already exists, which should help in generating a working prompt.

Building this has been a huge amount of fun. (I got so swept up in building it that I completely forgot to attend a fancy Christmas lunch.) There's a lot of work going on in the no-code community right now on how to integrate generative AI. Many plugins for various platforms will help you use LLM prompts to process data from the user. Saltcorn is probably a bit late to that game (thanks for saving our seat), but here we are: now you can do it with the large-language-model module.

However, the more interesting problem to think about is how generative AI can help in building the applications themselves. The naive dream is that we can just describe an application and a generative AI will build it: text-to-app, job done. I think that would miss out on a lot of the opportunities for no-code in customizing applications to very specific needs that emerge through collaboration with the domain experts and the stakeholders. Our proposal is instead to use generative AI in very specific application-building steps, where the context builds up over time and is validated by the users. This buildup of context in turn helps to build more specific prompts that guide more difficult code generation demands. That's our thinking for the moment, and we'll see how that pans out in the next few months.